The Problem

Recently I had to migrate a bunch of files from one VMware hypervisor to another. It turned out that there are multiple ways to do this. The setup is as follows: two computers, both running the VMware vSphere Hypervisor (ESXi), connected to the same LAN via a 1 Gigabit connection.

Solutions

As stated before, there are multiple ways to copy data between those two machines. In this article I focus on techniques that do not require third-party software. The following sections describe my attempts to copy an ISO image from one host (192.168.30.3) to another host (192.168.30.79). All of these attempts solve the problem, but their performance differs. All tests were performed on two similar machines with no additional load on them.

To be able to reproduce these steps, you have to turn on the ESXi Shell on the hypervisor to get access via SSH. Then you can access the console via PuTTY on Windows or ssh on Linux.

Using keyboard-interactive authentication.

Password:

The time and date of this login have been sent to the system logs.

VMware offers supported, powerful system administration tools. Please

see www.vmware.com/go/sysadmintools for details.

The ESXi Shell can be disabled by an administrative user. See the

vSphere Security documentation for more information.

~ #

Copy via SSH / SCP

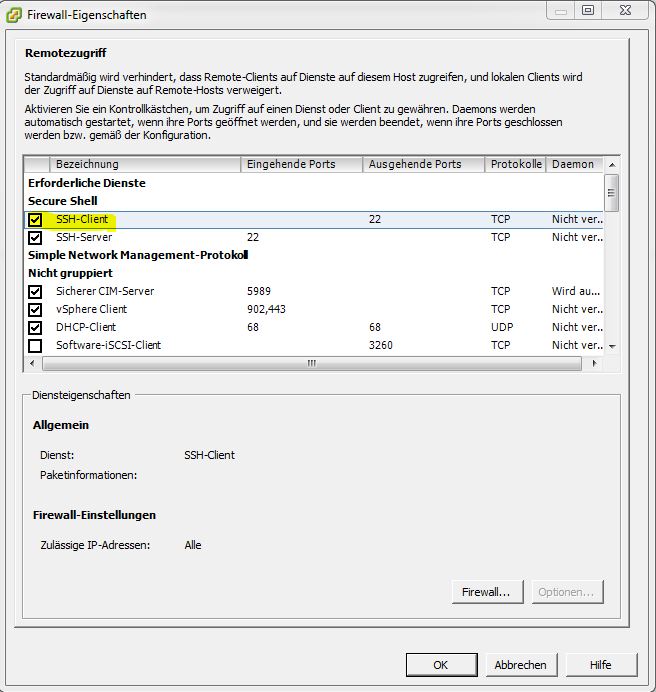

The most obvious solution is to copy the data via SCP from one machine to the other. Even if you have turned on the ESXi Shell, you will discover that a simple copy operation is not possible, because the firewall blocks outgoing connections on port 22. So the first step is to enable the SSH client in the firewall.

Once you have done this, you can connect to the other machine via SSH/SCP and transfer files.

So let's try to copy a 468 MB file from the source to the target:

Password:

acp_systemcd.iso 100% 468MB 6.4MB/s 01:13

We see that it takes 1:13 to copy 468 MB of data. Since this is not very fast, I tried copying in the opposite direction. Instead of pulling the file from the source, I pushed it onto the target:

The authenticity of host '192.168.30.79 (192.168.30.79)' can't be established.

RSA key fingerprint is 50:de:1e:46:b6:5f:30:7e:31:51:66:00:d7:59:b5:f8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.30.79' (RSA) to the list of known hosts.

Password:

acp_systemcd.iso 100% 468MB 5.8MB/s 01:21

This also wasn't as fast as I would have expected - the machines are connected with a 1 Gbit connection! Then I had the following idea: let's send the data compressed via scp, which should speed up the effective data transfer a little. This time I tried to send a 3 GB file over the wire. The compression is done on the fly by adding the -C flag to the scp command.

Password:

Server2008R2_Eval.iso 100% 3053MB 3.6MB/s 14:02

WHOW! The transfer rate dropped to 3.6 MB/s. My next guess was that the system was busy in some way, but since no VMs were running, this could not be the case. Still, if the machine were busy, then everything should be slow, so let's copy the same file again, this time without compression.

Password:

Server2008R2_Eval.iso 100% 3053MB 7.3MB/s 06:58
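To put the scp figures so far into perspective, here is a small Python sketch that recomputes the average rate from the size and elapsed time printed by scp and compares it with the link speed. The helper names are made up for this illustration, and treating 1 Gbit/s as 125 MB/s (no protocol overhead) is a simplifying assumption.

```python
def parse_duration(text):
    """Convert a duration such as '01:13' or '1:02:03' into seconds."""
    seconds = 0
    for part in text.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def throughput_mb_per_s(size_mb, duration):
    """Average rate in MB/s for size_mb megabytes moved in 'mm:ss'."""
    return size_mb / parse_duration(duration)


# Assumption: 1 Gbit/s is treated as 125 MB/s, ignoring protocol overhead.
LINK_MB_PER_S = 1000 / 8

# (label, size in MB, elapsed time), taken from the scp output above
runs = [
    ("468 MB, pull", 468, "01:13"),
    ("468 MB, push", 468, "01:21"),
    ("3053 MB, scp -C", 3053, "14:02"),
    ("3053 MB, no compression", 3053, "06:58"),
]

for label, size_mb, elapsed in runs:
    rate = throughput_mb_per_s(size_mb, elapsed)
    share = 100 * rate / LINK_MB_PER_S
    print(f"{label}: {rate:.1f} MB/s ({share:.0f}% of line rate)")
```

Under that assumption, every run stays in the single-digit percent range of what the link could carry in theory.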

... and again I was disappointed (or confused). After a cup of coffee and several more attempts, I came to the following conclusion:

Copy via netcat

Some internet forums indicated that VMware intentionally slows down scp operations in the admin shell. Well, there are other ways to get your data shipped. One of the most famous tools among hackers and system administrators is netcat, often referred to as the Swiss army knife of networking. Fortunately netcat is installed out of the box on the ESXi hosts. So let's try to move the data by running netcat as a server on the destination machine. The tricky part was to find a port that could be used without having to change the firewall settings. I chose port 8000, which is (according to a VMware Knowledge Base article) reserved for the vMotion service. Since, as far as I know, my hosts are not doing anything with vMotion, this seemed to be a viable choice.

On the receiving host I fired up netcat as a server that listens on port 8000 and writes everything it receives into the file acp_test_netcat.iso.

On the sending host I fired up netcat as a client that connects to the server and transmits the contents of the file acp_systemcd.iso.
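The listen-and-write / connect-and-send pattern described above can be illustrated with plain TCP sockets. This is not the netcat invocation used in the test. It is a Python 3 sketch of the same idea, and the function names and chunk size are assumptions made for this example.

```python
import socket

CHUNK = 64 * 1024  # bytes per read/write; an arbitrary choice


def open_listener(port, host="0.0.0.0"):
    """Create a TCP socket listening on the given port."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind((host, port))
    listener.listen(1)
    return listener


def receive_file(listener, dest_path):
    """Accept one connection and write everything it sends to dest_path."""
    conn, _addr = listener.accept()
    received = 0
    with conn, open(dest_path, "wb") as out:
        while True:
            data = conn.recv(CHUNK)
            if not data:  # the sender closed the connection
                break
            out.write(data)
            received += len(data)
    return received


def send_file(host, port, src_path):
    """Connect to host:port and stream the contents of src_path."""
    sent = 0
    with socket.create_connection((host, port)) as conn, open(src_path, "rb") as src:
        while True:
            data = src.read(CHUNK)
            if not data:
                break
            conn.sendall(data)
            sent += len(data)
    return sent
```

With these helpers, the receiving side would call `receive_file(open_listener(8000), "acp_test_netcat.iso")` and the sending side `send_file("192.168.30.79", 8000, "acp_systemcd.iso")`. As with netcat, there is no framing, checksum or compression on top of the TCP stream.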

It took 213 seconds to copy 467 MB of data, which means that netcat reaches 2.19 MB/s. Since netcat adds neither an application protocol nor compression on top of the raw data stream, my assumption (and the assertions of the internet forums) is confirmed: some things are intentionally slowed down within the VMware ESXi Shell.

Copy via Python & wget

OK, now it was time to figure out which programs are not crippled. Since VMware offers its own features to move data between two hosts, I assumed that they had slowed down everything in the standard shell that could be used for this purpose. To prove my theory I tried something less usual: I searched for a way to write a server/client myself. Then I stumbled over Python. Python is a general-purpose scripting language with a large standard library that lets you run a web server almost out of the box.

The other reasons for choosing Python were:

- It is a general-purpose language. It is not that easy to cripple a certain part of it (let's say, limit network speed), because before you run a script the Python interpreter has no idea what you are going to do.

- It is used by VMware itself, so why would they taint their own tools?

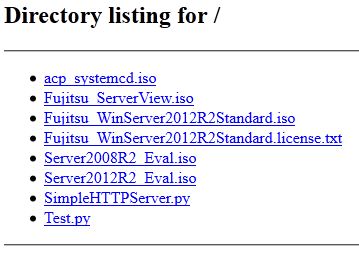

Python 2.6 is installed out of the box. So my idea was to use SimpleHTTPServer as a simple web server on the sender's side and the preinstalled wget as HTTP client on the receiving host. For those who are not aware of what SimpleHTTPServer does: this Python module serves files from the current directory and below, directly mapping the directory structure to HTTP requests.

Unfortunately this module was not installed on my machines, so I had to download it before I could start the server:

/vmfs/volumes/53ce71c4-5f426b5e-94a4-901b0e307310/iso-images # python -m SimpleHTTPServer

Serving HTTP on 0.0.0.0 port 8000 ...
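For readers on a current Python, the same serve-and-fetch setup can be sketched as follows. Note the assumptions: this uses Python 3, where the functionality of the Python 2 module SimpleHTTPServer lives in `http.server`, and `urllib.request` stands in for wget. The function names are invented for this example, and it was not run on an ESXi host.

```python
import functools
import http.server
import shutil
import threading
import urllib.request


def start_file_server(directory, port=8000, host="0.0.0.0"):
    """Serve `directory` over HTTP in a background thread; returns the server."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    server = http.server.ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def download(url, dest_path):
    """Fetch url and stream the response body into dest_path (the wget role)."""
    with urllib.request.urlopen(url) as response, open(dest_path, "wb") as out:
        shutil.copyfileobj(response, out)
```

On the sender this would be `start_file_server("iso-images")`, and on the receiver `download("http://192.168.30.3:8000/acp_systemcd.iso", "acp_systemcd.iso")`. Like SimpleHTTPServer, this serves everything below the directory without authentication or encryption, so it only suits a trusted network.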

A first attempt to access the python webserver with a standard browser showed me this beautiful picture:

SUCCESS! Now I just had to download the files on the client:

Connecting to 192.168.30.3:8000 (192.168.30.3:8000)

acp_systemcd.iso 100% |*******************************| 467M 0:00:00 ETA

BIG SUCCESS: The entirely uncompressed downloads led to the following results:

| Size | Time | Throughput |
|---|---|---|
| 467 MB | 1m:02s | 6.22 MB/s |
| 3 GB | 6m:45s | 7.58 MB/s |
| ~20 GB | ~36m | 9.25 MB/s |

Copy via NFS-Share

As a last attempt, I tried to move the data from one host to the other by copying it to an intermediate NFS share (which is, of course, also connected to the hosts via 1 Gbit). In this scenario I connected an additional datastore to the hosts. From previous experiments with VMware I remembered that a VM is able to run from a connected NFS storage. Of course it is not as fast as running from local disks, but since the difference was not too big, I hoped that NFS would be a way to get my data transferred rather quickly. I created the new NFS share on a Buffalo TeraStation and connected it to both VMware systems via the management GUI. Once the additional datastore is recognised by the hypervisor, it is automatically mounted in the /vmfs/volumes directory. In my case the volume was called Test.

2187187c-a7760563-fab1-163290c0c43f 5ebabd7d-d5b1bb03-41bf-0a54d491997a

53ce71b8-524a85a0-b04a-901b0e307310 Test

53ce71c4-5f426b5e-94a4-901b0e307310 b890daea-77712a93

53ce71c9-ae797f15-cf51-901b0e307310 local_datastorage

53d113eb-62b09240-52c6-901b0e307310 local_systemstorage

/vmfs/volumes # cp local_systemstorage/iso-images/Server2008R2_Eval.iso Test/

Again I copied a 3 GB file, this time from the local storage onto the mounted NFS share, and it took 13 minutes to complete. This means that the transfer speed is about 3.9 MB/s, but this is just the first half of the transfer. If you take into consideration that you still have to copy the file from the NFS share to the target computer to complete the task, the effective transfer speed is about 1.85 MB/s.
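The effect of the intermediate hop can be written down as a small formula: the two copies run one after the other, so their times add up. The Python sketch below assumes a rate for the second leg, which is not reported separately in the text, and the function name is made up for this example.

```python
def two_hop_rate(first_leg_mb_s, second_leg_mb_s):
    """Effective MB/s when a file is copied A -> share, then share -> B.

    The legs run sequentially, so total_time = size / r1 + size / r2,
    which gives an effective rate of 1 / (1/r1 + 1/r2).
    """
    return 1.0 / (1.0 / first_leg_mb_s + 1.0 / second_leg_mb_s)


measured = 3.9  # MB/s, local disk -> NFS share (from the text)

# Assumption: the second leg runs as fast as the first one.
print(round(two_hop_rate(measured, measured), 2))

# Assumption: the second leg is somewhat slower (3.5 MB/s is a made-up value).
print(round(two_hop_rate(measured, 3.5), 2))
```

With two equally fast legs the effective rate is simply half the single-leg rate. Any slower second leg pushes it further down, which would be in line with the figure of about 1.85 MB/s.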

Summary

Especially when I copied small files via scp, I saw peaks of up to 30 MB/s.

But these speeds usually disappeared within a few seconds, and then the data transfer rate settled at about 7.1 MB/s. (I copied 100 GB of data with scp in 4h:5m, which translates into an average speed of about 7.1 MB/s.)

I have never encountered average speeds above 10.5 MB/s. So where is this (intentionally placed?) bottleneck within the vSphere hypervisor? It seems to be located somewhere around the disk or within the disk scheduler, because if you just compress files on the local disk, the speed is also around 10 MB/s. To be more precise: decompressing a 22.5 GB gzip-compressed tar archive (with tar -xzf) took around 45 minutes, which translates into a speed of ~8.5 MB/s. Compression was slightly faster: there I got a speed of about 10 MB/s. From a hypervisor's point of view this would make sense, because serving the VMs should have first priority and maintenance jobs are normally not as important as running systems.
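One way to test the disk hypothesis separately from the network is to time a purely local copy. The following Python 3 sketch does that. The function name and chunk size are assumptions for this illustration, and the result is only a rough indicator because the operating system may serve the source file from its cache.

```python
import os
import time


def local_copy_rate(src_path, dest_path, chunk_size=1024 * 1024):
    """Copy src_path to dest_path on local storage and return the rate in MB/s."""
    copied = 0
    start = time.perf_counter()
    with open(src_path, "rb") as src, open(dest_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)
            copied += len(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # wait until the data has reached the disk
    # Guard against a zero interval on very small files.
    elapsed = max(time.perf_counter() - start, 1e-9)
    return copied / (1024 * 1024) / elapsed
```

If a local copy of a large file also settles around 10 MB/s, the network is unlikely to be the limiting factor.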

However, I was not able to demystify how exactly VMware slows down the internal tools, but (for me) the tests confirmed the theory that some tools are intentionally slowed down. In the end I copied my data with uncompressed scp, because speed was not the only concern: scp encrypts the data that is sent over the wire.